Latent Feature Grid Compression

About

Software project for my Master’s thesis, which investigates how Scene Representation Networks can be compressed with network pruning algorithms.

Despite extensive research in the field of volume data compression, modern volume visualization faces the challenge of handling both memory and network bottlenecks when visualizing high-resolution volume data at a smooth visual feedback rate. Recently, compression methods that utilize neural networks have gained considerable attention in the visual computing research community. These scene representation networks (SRNs) represent the encoded data implicitly as a learned function and achieve strong compression by limiting the complexity of the encoding network.

Goal

This thesis proposes to increase the compressiveness of learned data representations by using pruning algorithms in combination with neural networks, thereby enabling the network to learn the best tradeoff between network size and reconstruction quality during training.

Furthermore, a frequency encoding in the form of a wavelet transformation of the network feature space is proposed to enhance the pruning capabilities of dropout methods and further improve the compression quality of SRNs.

Theoretical Background

Two SRN architectures are investigated:

- Neurcomp by Lu et al. proposes a compression scheme based on overfitting a deep neural network directly to arbitrary scalar input data. The network is built as a monolithic structure, where the parameters are organised in a set of fully connected layers that are connected by residual blocks. By limiting the number of available parameters to less than the original data size, the network itself functions as an implicit compressed version of the data.

- fV-SRN by Weiss et al. trains a small network similar to NeRF but extended with a low-dimensional latent code vector to represent the original data. Since most of the memory requirements are concentrated on the latent code grid, and the network itself is quite small, this architecture significantly speeds up the reconstruction task.
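The idea that a network with fewer parameters than data values acts as a compressed representation can be illustrated with a short calculation. The following is a minimal sketch, not the thesis code: it counts the parameters of a plain fully connected network (residual connections and latent grids are ignored) and assumes that voxels and parameters are stored at the same precision. The helper names and the layer widths are hypothetical.

```python
def mlp_parameter_count(layer_widths):
    """Count weights and biases of a plain fully connected network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_widths, layer_widths[1:]))


def compression_ratio(volume_shape, layer_widths, bytes_per_value=4):
    """Raw volume size divided by network size.

    Assumption: voxels and parameters use the same number of bytes.
    """
    num_voxels = 1
    for extent in volume_shape:
        num_voxels *= extent
    raw_bytes = num_voxels * bytes_per_value
    net_bytes = mlp_parameter_count(layer_widths) * bytes_per_value
    return raw_bytes / net_bytes


# Hypothetical example: a 256^3 scalar volume and a network (x, y, z) -> value.
widths = [3, 64, 64, 64, 1]
print(mlp_parameter_count(widths))
print(compression_ratio((256, 256, 256), widths))
```

A ratio above 1 means the network is smaller than the raw data. Pruning aims to raise this ratio further by removing parameters during training.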

Two kinds of pruning algorithms are analysed:

- Deterministic learnable masks that observe the network parameters (e.g. Smallify by Leclerc et al.).

- Probabilistic dropout layers that omit each neuron with a specific stochastic dropout probability (e.g. Variational Dropout by Kingma et al.).
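The two families differ mainly in how the keep-or-drop decision is made. The sketch below shows one simplified decision rule for each; it is an illustration under stated assumptions, not the implementation used in the thesis or in the cited papers. For the deterministic case it assumes one learned scale factor per neuron that is shrunk by an L1 penalty and pruned once it reaches zero (Smallify itself uses a different removal criterion). For the probabilistic case it assumes each parameter has a learned mean and variance and is pruned when log(variance / mean^2) exceeds a threshold; the value 3 is a common choice in sparsifying variants of variational dropout and is an assumption here. All function names are hypothetical.

```python
import math


def soft_threshold(value, amount):
    """Proximal step for an L1 penalty: shrink towards zero, clamp at zero."""
    if value > amount:
        return value - amount
    if value < -amount:
        return value + amount
    return 0.0


def deterministic_keep_mask(scales, shrink):
    """Keep a neuron only if its learned scale survives the L1 shrinkage."""
    return [soft_threshold(s, shrink) != 0.0 for s in scales]


def variational_keep_mask(means, variances, log_alpha_threshold=3.0, eps=1e-8):
    """Keep a parameter only if its noise-to-signal ratio stays below the threshold.

    log_alpha = log(variance) - log(mean^2); a large value means the
    parameter is dominated by noise and can be dropped.
    """
    keep = []
    for theta, sigma_sq in zip(means, variances):
        log_alpha = math.log(sigma_sq + eps) - math.log(theta * theta + eps)
        keep.append(log_alpha < log_alpha_threshold)
    return keep


# Hypothetical values for four neurons and two parameters.
print(deterministic_keep_mask([0.5, 0.01, -0.3, -0.02], shrink=0.05))
print(variational_keep_mask(means=[1.0, 0.01], variances=[0.01, 1.0]))
```

In both cases the mask is a by-product of training, so the trade-off between network size and reconstruction quality is controlled by the strength of the regularization rather than by a fixed pruning schedule.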

Results

All experiments are performed on the datasets at the top of the website. Both fV-SRN and Neurcomp are able to outperform state-of-the-art compression algorithms such as TTHRESH, which indicates that further research and development in refining learning-based compression approaches is justified.

The pruning algorithms work best when applied to large, open networks, where individual nodes have relatively low influence on the reconstruction quality. In this respect, the Neurcomp architecture is held back by its dependence on the residual blocks. While these blocks provide stability to the deeper network architecture, they also require the input and output dimensions of the blocks to be of the same size, thereby effectively halving the number of prunable parameters in the network.

For fV-SRN, on the other hand, pruning is performed on the whole latent feature grid and the pruned network outperforms the unpruned baseline by up to 5 PSNR points.
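For readers unfamiliar with the metric, the following is a minimal sketch of how PSNR is commonly computed. It assumes that PSNR is measured in decibels on values normalized to a known range; the `value_range` parameter and the function name are assumptions, and the exact evaluation code of the thesis may differ.

```python
import math


def psnr(original, reconstruction, value_range=1.0):
    """Peak signal-to-noise ratio in dB between two equally long value lists."""
    squared_errors = [(a - b) ** 2 for a, b in zip(original, reconstruction)]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0.0:
        return math.inf  # identical signals
    return 10.0 * math.log10(value_range ** 2 / mse)


# Hypothetical example: three voxel values and a slightly wrong reconstruction.
print(psnr([0.0, 0.5, 1.0], [0.1, 0.5, 0.9]))
```

Because the scale is logarithmic, a gain of 5 dB corresponds to a mean squared error that is smaller by a factor of about 3.2 (10^0.5).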

The wavelet transformation is able to further enhance the effectiveness of the pruning algorithms. This is because most of the latent feature information of the fV-SRN feature grid is encoded into just a few wavelet coefficients, enabling the pruning algorithms to easily distinguish between important and unimportant parameters.
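The concentration effect can be seen on a toy example. The sketch below applies a one-dimensional orthonormal Haar transform to a piecewise constant signal; the Haar wavelet, the 1D setting, and all function names are assumptions chosen for brevity, since this README does not state which wavelet is applied to the feature grid.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_forward(signal):
    """Multi-level orthonormal Haar transform; length must be a power of two."""
    coeffs = list(signal)
    length = len(coeffs)
    while length > 1:
        half = length // 2
        averages = [(coeffs[2 * i] + coeffs[2 * i + 1]) / SQRT2 for i in range(half)]
        details = [(coeffs[2 * i] - coeffs[2 * i + 1]) / SQRT2 for i in range(half)]
        coeffs[:length] = averages + details
        length = half
    return coeffs


def haar_inverse(coeffs):
    """Invert haar_forward by merging averages and details level by level."""
    signal = list(coeffs)
    length = 1
    while length < len(signal):
        merged = []
        for avg, det in zip(signal[:length], signal[length:2 * length]):
            merged.append((avg + det) / SQRT2)
            merged.append((avg - det) / SQRT2)
        signal[:2 * length] = merged
        length *= 2
    return signal


def count_significant(coeffs, tolerance=1e-9):
    """Number of coefficients whose magnitude exceeds the tolerance."""
    return sum(1 for c in coeffs if abs(c) > tolerance)


# Hypothetical feature row: eight values, all of them non-zero.
features = [4.0, 4.0, 4.0, 4.0, 1.0, 1.0, 1.0, 1.0]
coeffs = haar_forward(features)
print(count_significant(features), count_significant(coeffs))
print(haar_inverse(coeffs))
```

In the spatial domain every value of this signal matters, while in the wavelet domain all but two coefficients vanish and can be pruned without changing the reconstruction. Real feature grids are less regular, so the coefficients become small rather than exactly zero.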

For a more extensive review of the methods and experiments, please refer to the PDF of the Master’s thesis.

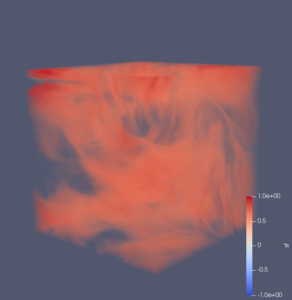

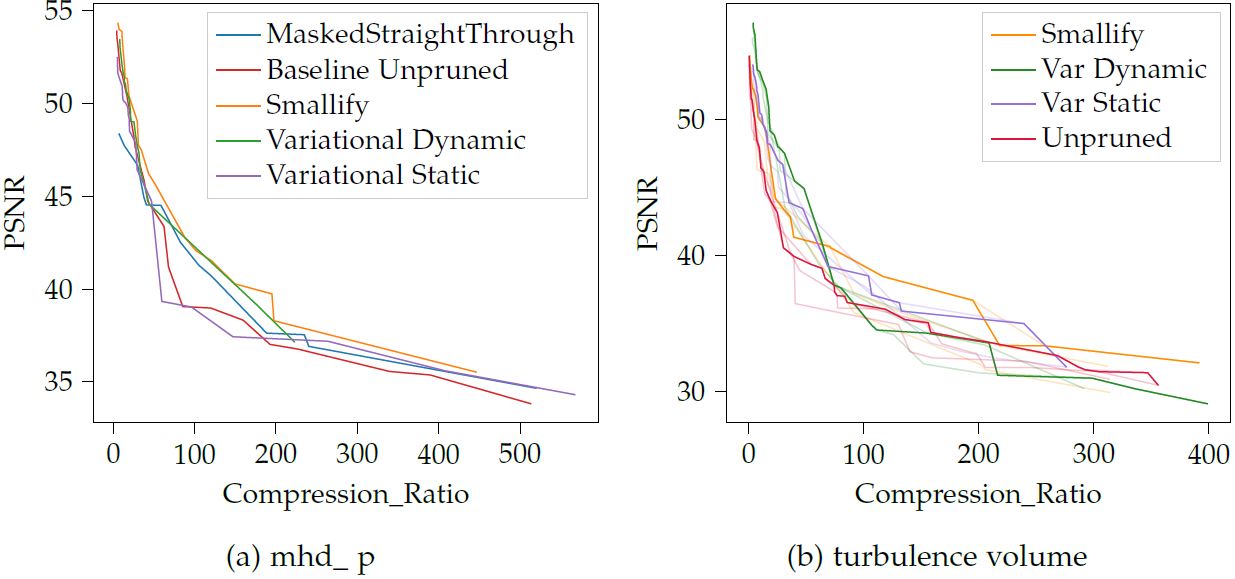

Analysis of the compression quality of Neurcomp with and without pruning. A network architecture search is performed to generate good parameterizations for the network and the pruning algorithms. The Pareto frontier of this search is then plotted.

Despite the fact that the pruning algorithms can surpass the unpruned baseline, it is not possible to make a conclusive evaluation on whether pruning is advantageous for Neurcomp due to the significant variance in the data and the relatively minor quality improvements.
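Extracting a Pareto frontier from the results of such a search can be sketched as follows. This is a minimal illustration that assumes each run is summarized by a compressed size (smaller is better) and a PSNR value (larger is better); the actual axes of the plots, the function name, and the sample numbers are assumptions.

```python
def pareto_frontier(runs):
    """Return the runs that no other run beats in both size and quality.

    runs: iterable of (compressed_size, psnr) pairs.
    The result is sorted by increasing size and strictly increasing quality.
    """
    frontier = []
    best_quality = float("-inf")
    # Sort by size; for equal sizes, visit the highest quality first.
    for size, quality in sorted(runs, key=lambda run: (run[0], -run[1])):
        if quality > best_quality:
            frontier.append((size, quality))
            best_quality = quality
    return frontier


# Hypothetical search results: (size in kB, PSNR in dB).
runs = [(10, 30.0), (20, 28.0), (20, 35.0), (40, 34.0), (80, 41.0)]
print(pareto_frontier(runs))
```

Only the runs on the frontier are plotted, so each curve shows the best quality found at a given size rather than the average behaviour of a configuration.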

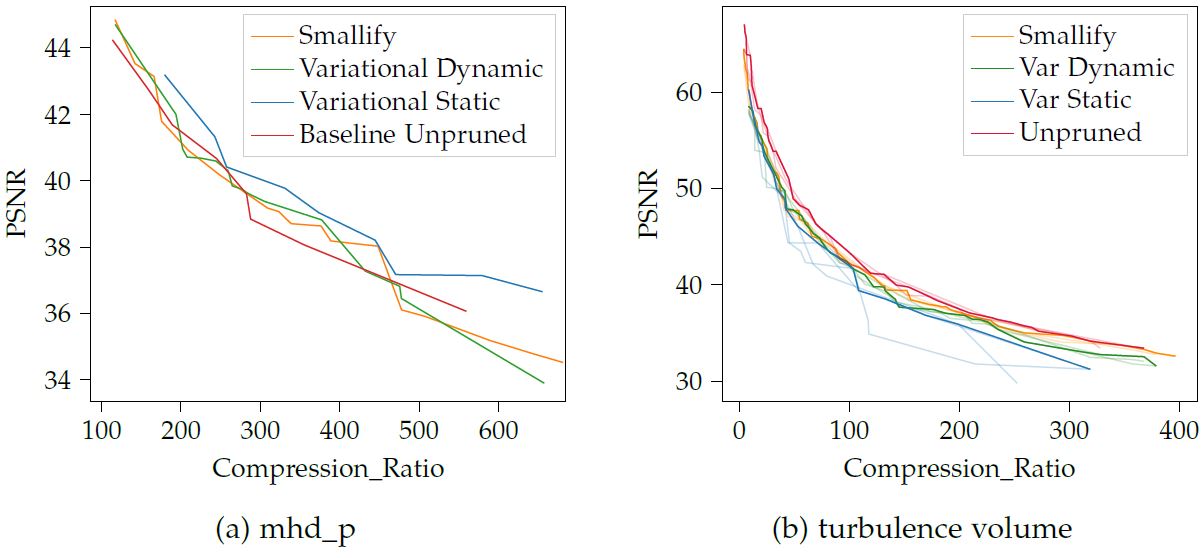

Analysis of the compression quality of fV-SRN with and without pruning. A network architecture search is performed to generate good parameterizations for the network and the pruning algorithms. The Pareto frontier of this search is then plotted.

Although significant variance is present in the data, the pruning algorithms are effective at increasing the compression quality and consistently outperform the unpruned baseline.

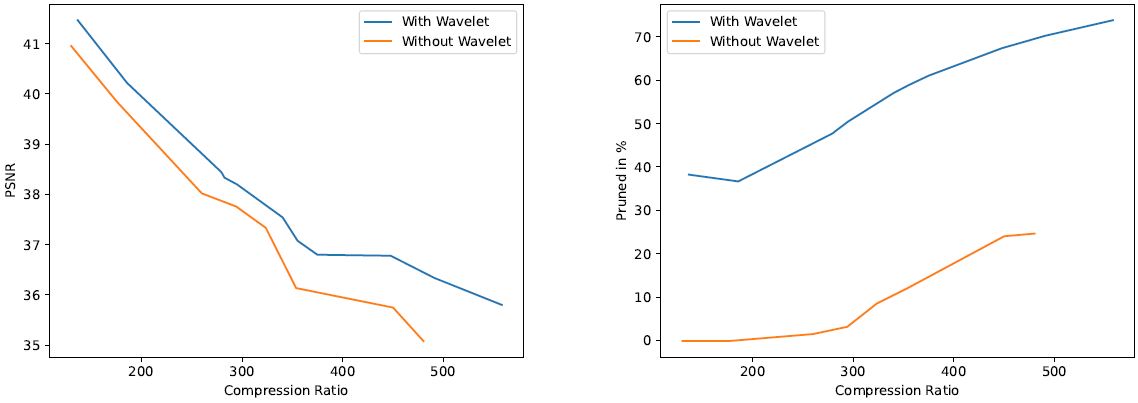

Comparison of pruning effectiveness on the fV-SRN when the feature grid is represented in the frequency domain (with wavelets) or in the spatial domain (without wavelets). Here, variational dropout is evaluated with regard to its overall quality-compression ratio (left figure) and the amount of pruning performed (right figure).

In the time-frequency domain of the wavelet transformation, most information of the original feature grid is concentrated in a few wavelet coefficients, enabling the pruning algorithms to distinguish more efficiently between parameters that are important and unimportant to the reconstruction task. This results in significantly more pruning and also a higher quality-compression ratio.